※ This post was written while testing the example below.

GitHub - tensorflow/tensorrt: TensorFlow/TensorRT integration

TensorFlow/TensorRT integration. Contribute to tensorflow/tensorrt development by creating an account on GitHub.

github.com

1. Env.

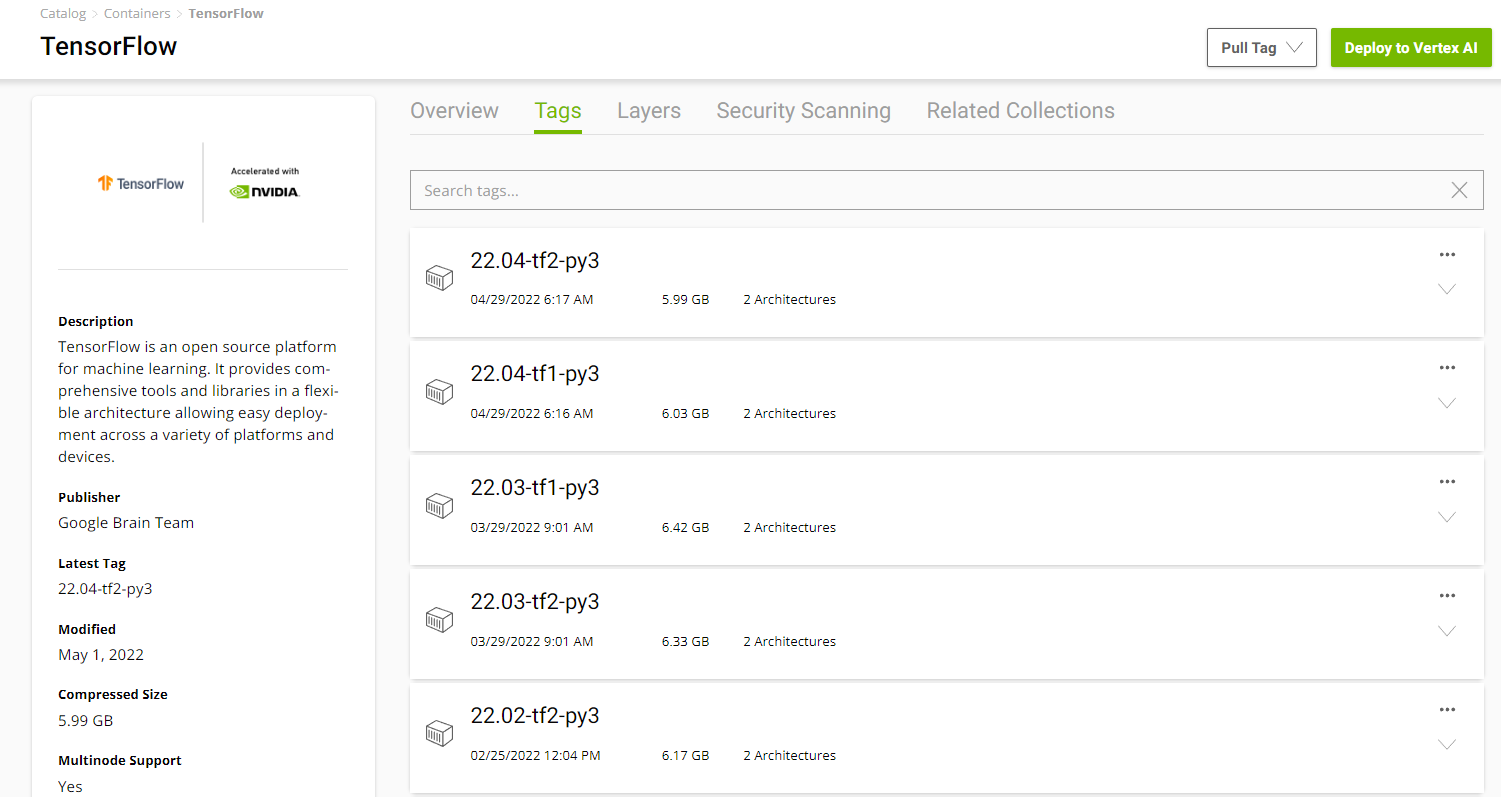

I used the Docker image below.

(I also tested on Colab and several other environments, but Docker ran with the fewest errors.)

If you are not familiar with Docker, see the post below.

https://jstar0525.tistory.com/202

[Docker] Deep Learning research and development environment setting

This post describes the deep learning development environment I use. In our lab, many researchers connect from their own PCs to a server over ssh and use its GPUs. So far

jstar0525.tistory.com

If you have Docker 19.03 or later, a typical command to launch the container is:

$ docker run --gpus all -it --rm nvcr.io/nvidia/tensorflow:22.04-tf2-py3

https://catalog.ngc.nvidia.com/orgs/nvidia/containers/tensorflow

TensorFlow | NVIDIA NGC

TensorFlow is an open source platform for machine learning. It provides comprehensive tools and libraries in a flexible architecture allowing easy deployment across a variety of platforms and devices.

catalog.ngc.nvidia.com
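Because `--rm` deletes the container on exit, files written inside it (for example the converted SavedModel directories) are lost unless a host directory is mounted. The sketch below builds such a command. The mount point `/workspace/host` and the helper name `build_docker_command` are assumptions made for illustration and are not part of the NGC instructions.

```python
import os
import shlex

def build_docker_command(image, host_dir, container_dir="/workspace/host"):
    """Build a `docker run` argument list that mounts host_dir into the container.

    container_dir is a hypothetical mount point chosen for this sketch.
    """
    return [
        "docker", "run",
        "--gpus", "all",      # expose all GPUs, as in the command above
        "-it", "--rm",        # interactive; remove the container on exit
        "-v", f"{os.path.abspath(host_dir)}:{container_dir}",  # keep files on the host
        image,
    ]

cmd = build_docker_command("nvcr.io/nvidia/tensorflow:22.04-tf2-py3", ".")
print(shlex.join(cmd))
```

The printed line can be pasted into a shell; anything saved under the mounted directory then survives after the container is removed.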

2. Check GPU

# nvidia-smi

Sun May 1 13:22:21 2022
+-----------------------------------------------------------------------------+
| NVIDIA-SMI 510.54       Driver Version: 510.54       CUDA Version: 11.6     |
|-------------------------------+----------------------+----------------------+
| GPU  Name        Persistence-M| Bus-Id        Disp.A | Volatile Uncorr. ECC |
| Fan  Temp  Perf  Pwr:Usage/Cap|         Memory-Usage | GPU-Util  Compute M. |
|                               |                      |               MIG M. |
|===============================+======================+======================|
|   0  NVIDIA GeForce ...  Off  | 00000000:05:00.0 Off |                  N/A |
| 22%   31C    P8    15W / 250W |     17MiB / 12288MiB |      0%      Default |
|                               |                      |                  N/A |
+-------------------------------+----------------------+----------------------+
|   1  NVIDIA TITAN X ...  Off  | 00000000:06:00.0 Off |                  N/A |
| 23%   25C    P8     8W / 250W |      6MiB / 12288MiB |      0%      Default |
|                               |                      |                  N/A |
+-------------------------------+----------------------+----------------------+
|   2  NVIDIA TITAN X ...  Off  | 00000000:09:00.0 Off |                  N/A |
| 23%   23C    P8     8W / 250W |      6MiB / 12288MiB |      0%      Default |
|                               |                      |                  N/A |
+-------------------------------+----------------------+----------------------+
|   3  NVIDIA TITAN RTX    Off  | 00000000:0A:00.0 Off |                  N/A |
| 40%   27C    P8     8W / 280W |      5MiB / 24576MiB |      0%      Default |
|                               |                      |                  N/A |
+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+
| Processes:                                                                  |
|  GPU   GI   CI        PID   Type   Process name                  GPU Memory |
|        ID   ID                                                   Usage      |
|=============================================================================|
|    0   N/A  N/A      1406      G                                       9MiB |
|    0   N/A  N/A      1863      G                                       4MiB |
|    1   N/A  N/A      1406      G                                       4MiB |
|    2   N/A  N/A      1406      G                                       4MiB |
|    3   N/A  N/A      1406      G                                       4MiB |
+-----------------------------------------------------------------------------+

Of the GPUs above, I will use GPU 3 for the tests.

import os

# Order GPUs by PCI bus ID so the indices match nvidia-smi, then expose only GPU 3.
os.environ["CUDA_DEVICE_ORDER"] = "PCI_BUS_ID"
os.environ["CUDA_VISIBLE_DEVICES"] = "3"
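With `CUDA_DEVICE_ORDER=PCI_BUS_ID`, the indices in `CUDA_VISIBLE_DEVICES` follow the `nvidia-smi` numbering, and the selected GPUs are renumbered from 0 inside the process. The sketch below only illustrates that renumbering. `visible_device_map` is a hypothetical helper, and it assumes the variable holds plain integer indices rather than GPU UUIDs.

```python
def visible_device_map(cuda_visible_devices):
    """Map in-process (logical) GPU indices to physical indices.

    Assumes a comma-separated list of integer indices, e.g. "3" or "3,1".
    """
    physical = [int(tok) for tok in cuda_visible_devices.split(",") if tok.strip()]
    return {logical: phys for logical, phys in enumerate(physical)}

print(visible_device_map("3"))    # physical GPU 3 is seen as GPU 0
print(visible_device_map("3,1"))  # order in the variable decides the logical order
```

This is why TensorFlow later reports the TITAN RTX as `/device:GPU:0`. The variables need to be set before TensorFlow initializes the GPU.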

3. Install Dependencies

# pip install pillow matplotlib

4. Importing required libraries

from __future__ import absolute_import, division, print_function, unicode_literals

import os

import time

import numpy as np

import matplotlib.pyplot as plt

import tensorflow as tf

from tensorflow import keras

from tensorflow.python.compiler.tensorrt import trt_convert as trt

from tensorflow.python.saved_model import tag_constants

from tensorflow.keras.preprocessing import image

from tensorflow.keras.applications.resnet50 import preprocess_input, decode_predictions

print("Tensorflow version: ", tf.version.VERSION)

# check TensorRT version
print("TensorRT version: ")
!dpkg -l | grep nvinfer

Tensorflow version: 2.8.0

TensorRT version:

ii libnvinfer-bin 8.2.4-1+cuda11.4 amd64 TensorRT binaries

ii libnvinfer-dev 8.2.4-1+cuda11.4 amd64 TensorRT development libraries and headers

ii libnvinfer-plugin-dev 8.2.4-1+cuda11.4 amd64 TensorRT plugin libraries and headers

ii libnvinfer-plugin8 8.2.4-1+cuda11.4 amd64 TensorRT plugin library

ii libnvinfer8 8.2.4-1+cuda11.4 amd64 TensorRT runtime libraries
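The package version strings above encode both the TensorRT version and the CUDA version the packages were built for (here 11.4). The `CUDA Version: 11.6` shown by `nvidia-smi` is the highest CUDA version the driver supports, so the two numbers do not have to match. A minimal sketch for pulling both out of `dpkg -l` output follows; `parse_nvinfer_versions` is a hypothetical helper, and the regular expression assumes the `X.Y.Z-N+cudaA.B` pattern seen above.

```python
import re

# Two lines copied from the dpkg output above.
DPKG_OUTPUT = """\
ii libnvinfer-bin 8.2.4-1+cuda11.4 amd64 TensorRT binaries
ii libnvinfer8 8.2.4-1+cuda11.4 amd64 TensorRT runtime libraries
"""

def parse_nvinfer_versions(dpkg_text):
    """Return {package: (tensorrt_version, cuda_version)} from `dpkg -l` lines."""
    pattern = re.compile(
        r"^ii\s+(libnvinfer\S*)\s+([\d.]+)-\d+\+cuda([\d.]+)\s", re.MULTILINE
    )
    return {pkg: (trt, cuda) for pkg, trt, cuda in pattern.findall(dpkg_text)}

versions = parse_nvinfer_versions(DPKG_OUTPUT)
print(versions["libnvinfer8"])
```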

5. Check Tensor core GPU

from tensorflow.python.client import device_lib

def check_tensor_core_gpu_present():
    """Return True if any local GPU has compute capability 7.0 or higher."""
    local_device_protos = device_lib.list_local_devices()
    for line in local_device_protos:
        if "compute capability" in str(line):
            compute_capability = float(line.physical_device_desc.split("compute capability: ")[-1])
            if compute_capability >= 7.0:
                return True
    return False

print("Tensor Core GPU Present:", check_tensor_core_gpu_present())
tensor_core_gpu = check_tensor_core_gpu_present()

Tensor Core GPU Present: True

2022-05-01 13:35:46.097900: I tensorflow/core/platform/cpu_feature_guard.cc:152] This TensorFlow binary is optimized with oneAPI Deep Neural Network Library (oneDNN) to use the following CPU instructions in performance-critical operations: SSE3 SSE4.1 SSE4.2 AVX

To enable them in other operations, rebuild TensorFlow with the appropriate compiler flags.

2022-05-01 13:35:46.629242: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1525] Created device /device:GPU:0 with 22850 MB memory: -> device: 0, name: NVIDIA TITAN RTX, pci bus id: 0000:0a:00.0, compute capability: 7.5

2022-05-01 13:35:46.631413: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1525] Created device /device:GPU:0 with 22850 MB memory: -> device: 0, name: NVIDIA TITAN RTX, pci bus id: 0000:0a:00.0, compute capability: 7.5
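The check above converts the compute capability to a float. A slightly more defensive variant, sketched below, parses the major and minor numbers as integers and compares them as a tuple. The function names are hypothetical, and the 7.0 threshold is the same one used above.

```python
import re

def parse_compute_capability(device_desc):
    """Extract (major, minor) from a TensorFlow physical_device_desc string."""
    match = re.search(r"compute capability:\s*(\d+)\.(\d+)", device_desc)
    if match is None:
        return None  # e.g. a CPU device has no compute capability
    return int(match.group(1)), int(match.group(2))

def has_tensor_cores(device_desc):
    """Tensor Cores are assumed present from compute capability 7.0 upward."""
    cc = parse_compute_capability(device_desc)
    return cc is not None and cc >= (7, 0)

# Description string copied from the log above.
desc = "device: 0, name: NVIDIA TITAN RTX, pci bus id: 0000:0a:00.0, compute capability: 7.5"
print(parse_compute_capability(desc), has_tensor_cores(desc))
```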

6. Check Data

!mkdir ./data

!wget -O ./data/img0.JPG "https://d17fnq9dkz9hgj.cloudfront.net/breed-uploads/2018/08/siberian-husky-detail.jpg?bust=1535566590&width=630"

!wget -O ./data/img1.JPG "https://www.hakaimagazine.com/wp-content/uploads/header-gulf-birds.jpg"

!wget -O ./data/img2.JPG "https://www.artis.nl/media/filer_public_thumbnails/filer_public/00/f1/00f1b6db-fbed-4fef-9ab0-84e944ff11f8/chimpansee_amber_r_1920x1080.jpg__1920x1080_q85_subject_location-923%2C365_subsampling-2.jpg"

!wget -O ./data/img3.JPG "https://www.familyhandyman.com/wp-content/uploads/2018/09/How-to-Avoid-Snakes-Slithering-Up-Your-Toilet-shutterstock_780480850.j

from tensorflow.keras.preprocessing import image

fig, axes = plt.subplots(nrows=2, ncols=2)

for i in range(4):
    img_path = './data/img%d.JPG' % i
    img = image.load_img(img_path, target_size=(224, 224))
    plt.subplot(2, 2, i+1)
    plt.imshow(img)
    plt.axis('off')

7. Load keras model and save tensorflow model

1) Using a pretrained model

from tensorflow.keras.applications.resnet50 import ResNet50

model = ResNet50(weights='imagenet')

for i in range(4):
    img_path = './data/img%d.JPG' % i
    img = image.load_img(img_path, target_size=(224, 224))
    x = image.img_to_array(img)
    x = np.expand_dims(x, axis=0)
    x = preprocess_input(x)

    preds = model.predict(x)
    # decode the results into a list of tuples (class, description, probability)
    # (one such list for each sample in the batch)
    print('{} - Predicted: {}'.format(img_path, decode_predictions(preds, top=3)[0]))

    plt.subplot(2, 2, i+1)
    plt.imshow(img)
    plt.axis('off')
    plt.title(decode_predictions(preds, top=3)[0][0][1])

./data/img0.JPG - Predicted: [('n02110185', 'Siberian_husky', 0.55681264), ('n02109961', 'Eskimo_dog', 0.41662714), ('n02110063', 'malamute', 0.021314112)]

./data/img1.JPG - Predicted: [('n01820546', 'lorikeet', 0.3011734), ('n01537544', 'indigo_bunting', 0.1698214), ('n01828970', 'bee_eater', 0.16135585)]

./data/img2.JPG - Predicted: [('n02481823', 'chimpanzee', 0.5092327), ('n02480495', 'orangutan', 0.16085911), ('n02480855', 'gorilla', 0.15105435)]

./data/img3.JPG - Predicted: [('n01729977', 'green_snake', 0.43619812), ('n03627232', 'knot', 0.088651404), ('n01749939', 'green_mamba', 0.08061639)]

# Save the entire model as a SavedModel.

model.save('resnet50_saved_model')

!saved_model_cli show --all --dir resnet50_saved_model

2) Using a custom-trained Keras model

from tensorflow.keras.models import load_model

model = load_model('my_model.h5')

model.summary()

model.save('my_tf_model')

!saved_model_cli show --all --dir my_tf_model
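Before handing a directory to the converter, it can help to confirm that `model.save(...)` really produced a SavedModel directory rather than a single `.h5` file. The sketch below checks for the `saved_model.pb` file and the `variables/` folder that a SavedModel contains. `looks_like_saved_model` is a hypothetical helper, and the demonstration uses a throwaway directory instead of a real model.

```python
from pathlib import Path
import tempfile

def looks_like_saved_model(model_dir):
    """Return True if model_dir has the basic SavedModel layout."""
    root = Path(model_dir)
    return (root / "saved_model.pb").is_file() and (root / "variables").is_dir()

# Demonstration on a temporary directory that mimics the layout.
with tempfile.TemporaryDirectory() as tmp:
    fake = Path(tmp) / "resnet50_saved_model"
    (fake / "variables").mkdir(parents=True)
    (fake / "saved_model.pb").touch()
    print(looks_like_saved_model(fake))       # layout present
    print(looks_like_saved_model(Path(tmp)))  # parent directory has no saved_model.pb
```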

8. Benchmarking Inference with native TF2.x saved model

1) Using a pretrained model

model = tf.keras.models.load_model('resnet50_saved_model')

batch_size = 8
batched_input = np.zeros((batch_size, 224, 224, 3), dtype=np.float32)

for i in range(batch_size):
    img_path = './data/img%d.JPG' % (i % 4)
    img = image.load_img(img_path, target_size=(224, 224))
    x = image.img_to_array(img)
    x = np.expand_dims(x, axis=0)
    x = preprocess_input(x)
    batched_input[i, :] = x

batched_input = tf.constant(batched_input)
print('batched_input shape: ', batched_input.shape)

batched_input shape: (8, 224, 224, 3)

# Benchmarking throughput
N_warmup_run = 50
N_run = 1000
elapsed_time = []

for i in range(N_warmup_run):
    preds = model.predict(batched_input)

for i in range(N_run):
    start_time = time.time()
    preds = model.predict(batched_input)
    end_time = time.time()
    elapsed_time = np.append(elapsed_time, end_time - start_time)
    if i % 50 == 0:
        print('Step {}: {:4.1f}ms'.format(i, (elapsed_time[-50:].mean()) * 1000))

print('Throughput: {:.0f} images/s'.format(N_run * batch_size / elapsed_time.sum()))

Step 0: 48.3ms

Step 50: 49.6ms

Step 100: 55.8ms

Step 150: 50.4ms

Step 200: 50.0ms

Step 250: 49.4ms

Step 300: 49.3ms

Step 350: 49.2ms

Step 400: 49.3ms

Step 450: 48.9ms

Step 500: 48.9ms

Step 550: 48.9ms

Step 600: 48.9ms

Step 650: 49.0ms

Step 700: 48.9ms

Step 750: 49.6ms

Step 800: 48.9ms

Step 850: 48.9ms

Step 900: 48.9ms

Step 950: 49.8ms

Throughput: 161 images/s

2) Using a custom-trained Keras model

model = tf.keras.models.load_model('my_tf_model')

For the custom model, the remaining steps are the same with only the saved model path changed, so they are omitted from here on.

9. Convert TF-TRT FP32 model

print('Converting to TF-TRT FP32...')

converter = trt.TrtGraphConverterV2(input_saved_model_dir='resnet50_saved_model',
                                    precision_mode=trt.TrtPrecisionMode.FP32,
                                    max_workspace_size_bytes=8000000000)

converter.convert()

converter.save(output_saved_model_dir='resnet50_saved_model_TFTRT_FP32')

print('Done Converting to TF-TRT FP32')

!saved_model_cli show --all --dir resnet50_saved_model_TFTRT_FP32

def predict_tftrt(input_saved_model):
    """Runs prediction on a single image and shows the result.
    input_saved_model (string): Name of the input model stored in the current dir
    """
    img_path = './data/img0.JPG'  # Siberian_husky
    img = image.load_img(img_path, target_size=(224, 224))
    x = image.img_to_array(img)
    x = np.expand_dims(x, axis=0)
    x = preprocess_input(x)
    x = tf.constant(x)

    saved_model_loaded = tf.saved_model.load(input_saved_model, tags=[tag_constants.SERVING])
    signature_keys = list(saved_model_loaded.signatures.keys())
    print(signature_keys)

    infer = saved_model_loaded.signatures['serving_default']
    print(infer.structured_outputs)

    labeling = infer(x)
    preds = labeling['predictions'].numpy()
    print('{} - Predicted: {}'.format(img_path, decode_predictions(preds, top=3)[0]))

    plt.subplot(2, 2, 1)
    plt.imshow(img)
    plt.axis('off')
    plt.title(decode_predictions(preds, top=3)[0][0][1])

def benchmark_tftrt(input_saved_model):
    saved_model_loaded = tf.saved_model.load(input_saved_model, tags=[tag_constants.SERVING])
    infer = saved_model_loaded.signatures['serving_default']

    N_warmup_run = 50
    N_run = 1000
    elapsed_time = []

    for i in range(N_warmup_run):
        labeling = infer(batched_input)

    for i in range(N_run):
        start_time = time.time()
        labeling = infer(batched_input)
        end_time = time.time()
        elapsed_time = np.append(elapsed_time, end_time - start_time)
        if i % 50 == 0:
            print('Step {}: {:4.1f}ms'.format(i, (elapsed_time[-50:].mean()) * 1000))

    print('Throughput: {:.0f} images/s'.format(N_run * batch_size / elapsed_time.sum()))

predict_tftrt('resnet50_saved_model_TFTRT_FP32')

./data/img0.JPG - Predicted: [('n02110185', 'Siberian_husky', 0.55681354), ('n02109961', 'Eskimo_dog', 0.41662621), ('n02110063', 'malamute', 0.021314066)]

benchmark_tftrt('resnet50_saved_model_TFTRT_FP32')

Step 0: 5.7ms

Step 50: 5.7ms

Step 100: 5.7ms

Step 150: 5.7ms

Step 200: 5.7ms

Step 250: 5.7ms

Step 300: 5.7ms

Step 350: 5.7ms

Step 400: 5.7ms

Step 450: 5.7ms

Step 500: 5.7ms

Step 550: 5.7ms

Step 600: 5.7ms

Step 650: 5.7ms

Step 700: 5.8ms

Step 750: 5.7ms

Step 800: 5.7ms

Step 850: 5.7ms

Step 900: 5.7ms

Step 950: 5.7ms

Throughput: 1398 images/s
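The benchmark loops in this post share one pattern: untimed warm-up calls, then timed calls, then throughput computed as images processed divided by total time. The sketch below factors that pattern into a framework-agnostic helper. `benchmark` is a hypothetical name, `time.perf_counter` is swapped in for `time.time` because it is a monotonic high-resolution clock, and the stand-in workload exists only so the sketch runs without a GPU.

```python
import time
import statistics

def benchmark(fn, batch_size, n_warmup=50, n_run=1000):
    """Time fn() n_run times after n_warmup untimed calls.

    Returns (mean latency in ms, throughput in images/s).
    fn should block until its result is ready, otherwise the timings are too low.
    """
    for _ in range(n_warmup):
        fn()
    latencies = []
    for _ in range(n_run):
        start = time.perf_counter()
        fn()
        latencies.append(time.perf_counter() - start)
    mean_ms = statistics.mean(latencies) * 1000
    throughput = n_run * batch_size / sum(latencies)
    return mean_ms, throughput

# Stand-in workload; replace with the real inference call.
mean_ms, ips = benchmark(lambda: sum(range(1000)), batch_size=8, n_warmup=5, n_run=100)
print('{:.3f} ms, {:.0f} images/s'.format(mean_ms, ips))
```

For the models in this post, the callable could wrap `infer(batched_input)`. If GPU work is still in flight when the call returns, copying the output to the host inside the callable (for example with `.numpy()`) is one way to make sure the full inference is timed; whether this changes the numbers here was not measured.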

10. Convert TF-TRT FP16 model

print('Converting to TF-TRT FP16...')

converter = trt.TrtGraphConverterV2(input_saved_model_dir='resnet50_saved_model',
                                    precision_mode=trt.TrtPrecisionMode.FP16,
                                    max_workspace_size_bytes=8000000000)
converter.convert()
converter.save(output_saved_model_dir='resnet50_saved_model_TFTRT_FP16')
print('Done Converting to TF-TRT FP16')

predict_tftrt('resnet50_saved_model_TFTRT_FP16')

./data/img0.JPG - Predicted: [('n02110185', 'Siberian_husky', 0.55445474), ('n02109961', 'Eskimo_dog', 0.41852438), ('n02110063', 'malamute', 0.021667156)]

benchmark_tftrt('resnet50_saved_model_TFTRT_FP16')

Step 0: 1.8ms

Step 50: 1.8ms

Step 100: 1.8ms

Step 150: 1.8ms

Step 200: 1.8ms

Step 250: 1.8ms

Step 300: 1.8ms

Step 350: 1.8ms

Step 400: 1.8ms

Step 450: 1.8ms

Step 500: 1.8ms

Step 550: 1.8ms

Step 600: 1.8ms

Step 650: 1.8ms

Step 700: 1.8ms

Step 750: 1.8ms

Step 800: 1.8ms

Step 850: 1.8ms

Step 900: 1.8ms

Step 950: 1.8ms

Throughput: 4403 images/s
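The FP16 probabilities differ slightly from the FP32 ones because half precision keeps only 10 stored mantissa bits. The sketch below rounds a single value to half precision with the standard `struct` module to show the size of one rounding step. It is only an illustration: in the converted model, rounding happens inside the layers and can accumulate, so the observed difference can be larger than a single step.

```python
import struct

def to_fp16_and_back(x):
    """Round a Python float to IEEE 754 half precision and return it as a float."""
    return struct.unpack('<e', struct.pack('<e', x))[0]

p = 0.55681264  # top-1 probability reported by the native model above
p16 = to_fp16_and_back(p)
print(p16, abs(p16 - p))
```

For values between 0.5 and 1 the spacing of half-precision numbers is 2^-11, so a single rounding changes the value by at most 2^-12 (about 0.00024).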

11. Convert TF-TRT INT8 model

Creating TF-TRT INT8 model requires a small calibration dataset. This data set ideally should represent the test data in production well, and will be used to create a value histogram for each layer in the neural network for effective 8-bit quantization.
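To make the role of calibration data concrete, the sketch below shows a deliberately simplified symmetric 8-bit quantization: the calibration values decide the scale, and values outside the calibrated range are clipped. This is an assumption-laden illustration, not TensorRT's calibrator, which picks ranges from histograms with a more elaborate criterion. All function names and the sample numbers are made up.

```python
def calibrate_scale(calibration_values):
    """Pick a symmetric scale so the largest observed magnitude maps to 127."""
    max_abs = max(abs(v) for v in calibration_values)
    return max_abs / 127.0 if max_abs > 0 else 1.0

def quantize(values, scale):
    """Map floats to int8 codes in [-127, 127], clipping out-of-range values."""
    return [max(-127, min(127, round(v / scale))) for v in values]

def dequantize(codes, scale):
    """Map int8 codes back to approximate float values."""
    return [c * scale for c in codes]

calibration = [-2.0, -0.5, 0.0, 0.7, 1.27]  # made-up activations
scale = calibrate_scale(calibration)
codes = quantize([0.7, 3.0], scale)         # 3.0 lies outside the calibrated range
print(codes, dequantize(codes, scale))
```

The clipped value shows why the calibration set should resemble production data: activations that never appear during calibration may fall outside the chosen range and lose accuracy.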

import os

# Kill the current Python process to restart the kernel; imports and helpers are redefined below.
os.kill(os.getpid(), 9)

from __future__ import absolute_import, division, print_function, unicode_literals

import os

import time

import numpy as np

import matplotlib.pyplot as plt

import tensorflow as tf

from tensorflow import keras

from tensorflow.python.compiler.tensorrt import trt_convert as trt

from tensorflow.python.saved_model import tag_constants

from tensorflow.keras.applications.resnet50 import ResNet50

from tensorflow.keras.preprocessing import image

from tensorflow.keras.applications.resnet50 import preprocess_input, decode_predictions

batch_size = 8
batched_input = np.zeros((batch_size, 224, 224, 3), dtype=np.float32)

for i in range(batch_size):
    img_path = './data/img%d.JPG' % (i % 4)
    img = image.load_img(img_path, target_size=(224, 224))
    x = image.img_to_array(img)
    x = np.expand_dims(x, axis=0)
    x = preprocess_input(x)
    batched_input[i, :] = x

batched_input = tf.constant(batched_input)
print('batched_input shape: ', batched_input.shape)

batched_input shape: (8, 224, 224, 3)

def calibration_input_fn():
    yield (batched_input, )

print('Converting to TF-TRT INT8...')
converter = trt.TrtGraphConverterV2(input_saved_model_dir='resnet50_saved_model',
                                    precision_mode=trt.TrtPrecisionMode.INT8,
                                    max_workspace_size_bytes=8000000000)
converter.convert(calibration_input_fn=calibration_input_fn)
converter.save(output_saved_model_dir='resnet50_saved_model_TFTRT_INT8')
print('Done Converting to TF-TRT INT8')

def predict_tftrt(input_saved_model):
    """Runs prediction on a single image and shows the result.
    input_saved_model (string): Name of the input model stored in the current dir
    """
    img_path = './data/img0.JPG'  # Siberian_husky
    img = image.load_img(img_path, target_size=(224, 224))
    x = image.img_to_array(img)
    x = np.expand_dims(x, axis=0)
    x = preprocess_input(x)
    x = tf.constant(x)

    saved_model_loaded = tf.saved_model.load(input_saved_model, tags=[tag_constants.SERVING])
    signature_keys = list(saved_model_loaded.signatures.keys())
    print(signature_keys)

    infer = saved_model_loaded.signatures['serving_default']
    print(infer.structured_outputs)

    labeling = infer(x)
    preds = labeling['predictions'].numpy()
    print('{} - Predicted: {}'.format(img_path, decode_predictions(preds, top=3)[0]))

    plt.subplot(2, 2, 1)
    plt.imshow(img)
    plt.axis('off')
    plt.title(decode_predictions(preds, top=3)[0][0][1])

def benchmark_tftrt(input_saved_model):
    saved_model_loaded = tf.saved_model.load(input_saved_model, tags=[tag_constants.SERVING])
    infer = saved_model_loaded.signatures['serving_default']

    N_warmup_run = 50
    N_run = 1000
    elapsed_time = []

    for i in range(N_warmup_run):
        labeling = infer(batched_input)

    for i in range(N_run):
        start_time = time.time()
        labeling = infer(batched_input)
        #prob = labeling['probs'].numpy()
        end_time = time.time()
        elapsed_time = np.append(elapsed_time, end_time - start_time)
        if i % 50 == 0:
            print('Step {}: {:4.1f}ms'.format(i, (elapsed_time[-50:].mean()) * 1000))

    print('Throughput: {:.0f} images/s'.format(N_run * batch_size / elapsed_time.sum()))

predict_tftrt('resnet50_saved_model_TFTRT_INT8')

./data/img0.JPG - Predicted: [('n02110185', 'Siberian_husky', 0.5527344), ('n02109961', 'Eskimo_dog', 0.42358398), ('n02110063', 'malamute', 0.020431519)]

benchmark_tftrt('resnet50_saved_model_TFTRT_INT8')

Step 0: 1.5ms

Step 50: 1.4ms

Step 100: 1.2ms

Step 150: 1.2ms

Step 200: 1.3ms

Step 250: 1.4ms

Step 300: 0.9ms

Step 350: 1.0ms

Step 400: 1.1ms

Step 450: 1.1ms

Step 500: 1.1ms

Step 550: 1.1ms

Step 600: 1.1ms

Step 650: 1.1ms

Step 700: 1.1ms

Step 750: 1.1ms

Step 800: 1.1ms

Step 850: 1.1ms

Step 900: 1.1ms

Step 950: 1.1ms

Throughput: 7087 images/s
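Speed is only half of the comparison; the predictions should also stay close to the native model. The sketch below compares the top-3 probabilities for `./data/img0.JPG` printed in the sections above. The numbers are copied from those outputs, and `max_abs_diff` is a hypothetical helper. One image is of course not an accuracy evaluation, so this is a sanity check only.

```python
# Top-3 probabilities for ./data/img0.JPG, copied from the outputs above.
results = {
    "native": {"Siberian_husky": 0.55681264, "Eskimo_dog": 0.41662714, "malamute": 0.021314112},
    "FP32":   {"Siberian_husky": 0.55681354, "Eskimo_dog": 0.41662621, "malamute": 0.021314066},
    "FP16":   {"Siberian_husky": 0.55445474, "Eskimo_dog": 0.41852438, "malamute": 0.021667156},
    "INT8":   {"Siberian_husky": 0.5527344,  "Eskimo_dog": 0.42358398, "malamute": 0.020431519},
}

def max_abs_diff(reference, other):
    """Largest absolute probability difference over the shared labels."""
    return max(abs(reference[k] - other[k]) for k in reference)

baseline = results["native"]
for name in ("FP32", "FP16", "INT8"):
    print('{}: max |dp| = {:.6f}'.format(name, max_abs_diff(baseline, results[name])))
```

In all three cases the top-1 class stays `Siberian_husky` and the ranking of the top 3 is unchanged; the differences grow as the precision drops.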

12. Conclusion

| ResNet50 | Original | FP32 | FP16 | INT8 |
|---|---|---|---|---|
| Throughput (images/s) | 161 | 1398 | 4403 | 7087 |
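The relative speedups follow directly from the measured throughputs. A short sketch of the arithmetic, using the values from the table above:

```python
# Throughput in images/s, copied from the table above.
throughput = {"Original": 161, "FP32": 1398, "FP16": 4403, "INT8": 7087}

baseline = throughput["Original"]
speedup = {name: value / baseline for name, value in throughput.items()}

for name, factor in speedup.items():
    print('{:8s} {:5d} images/s  {:5.1f}x'.format(name, throughput[name], factor))
```

Note that the baseline was timed through Keras `model.predict`, while the TF-TRT models were timed by calling the serving signature directly. `model.predict` carries some per-call overhead, so part of the gap between "Original" and FP32 may come from the calling path rather than from TensorRT itself.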

ref.

https://catalog.ngc.nvidia.com/orgs/nvidia/containers/tensorflow

TensorFlow | NVIDIA NGC


GitHub - tensorflow/tensorrt: TensorFlow/TensorRT integration


https://hagler.tistory.com/188

Converting a Keras Model to TensorRT

Getting started: To speed up model execution on a Jetson Nano, I chose to optimize the model with TensorRT. Even without setting up a local TensorRT environment, TensorRT can be used through Google Colab. A model built with Keras..

hagler.tistory.com